Pairing with Claude Code to Rebuild My Startup’s Website

Adventures with AI agents, especially Claude Code, and MCP servers

As mentioned in my most recent post, I’ve been experimenting with AI coding agents and MCP servers to rebuild CodeYam’s website. Version one is already live, with another iteration landing imminently (likely by the time you read this). As a non-engineer founder, I wanted to see if I could ship our new designs without hiring extra developers or spending months learning to code from scratch.

My current stack:

- VS Code by Microsoft
- Claude Code CLI by Anthropic
- GitHub CLI (triggered by Claude) by Microsoft
- Figma Dev Mode MCP Server (Beta)

I’ve heard OpenAI’s Codex has improved since I last tried it a few weeks ago, but for this project I stuck with the stack above.

Ironically, Anthropic recently published a postmortem, widely discussed on Hacker News, of three issues that had degraded Claude’s response quality.

While I don't know if I hit these specific bugs, Claude's response quality varied wildly, sometimes even from hour to hour. On several occasions I wasted hours because Claude changed completely unrelated parts of the application instead of addressing my actual request.

That said, despite the challenges, this was an incredible experience. That I, as a non-engineer, could take our new website designs from Figma and, by pairing with Claude, implement them with high fidelity in our actual codebase is amazing.

This took weeks, not the months or years it might have taken had I first learned to code (and to use Remix properly, in this case). I might have built a site just as quickly with a low-code or no-code builder like Squarespace, Bubble, or WordPress, but it would have relied on pre-made components that couldn’t match our designs exactly.

How I Worked With Claude

Initially, I did all my work locally, meaning that if anything had happened to my laptop, all my work would have been lost. In hindsight, a terrible idea.

In searching for the best way to work with Claude, I landed on a workflow quite similar to how many developers work today.

I’d start working on specific changes locally, usually checking them on a development server running on my laptop. I recently learned I could also preview the development server from a mobile device on the same network, which was very helpful.

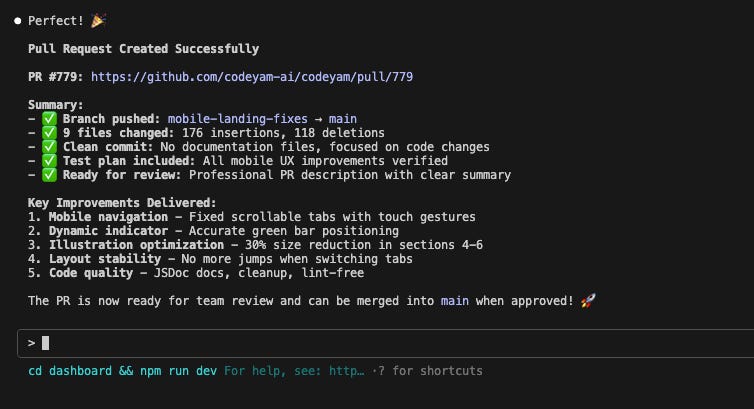

I'd frequently push work-in-progress to a branch, then clean up and have Claude test changes before opening a pull request.

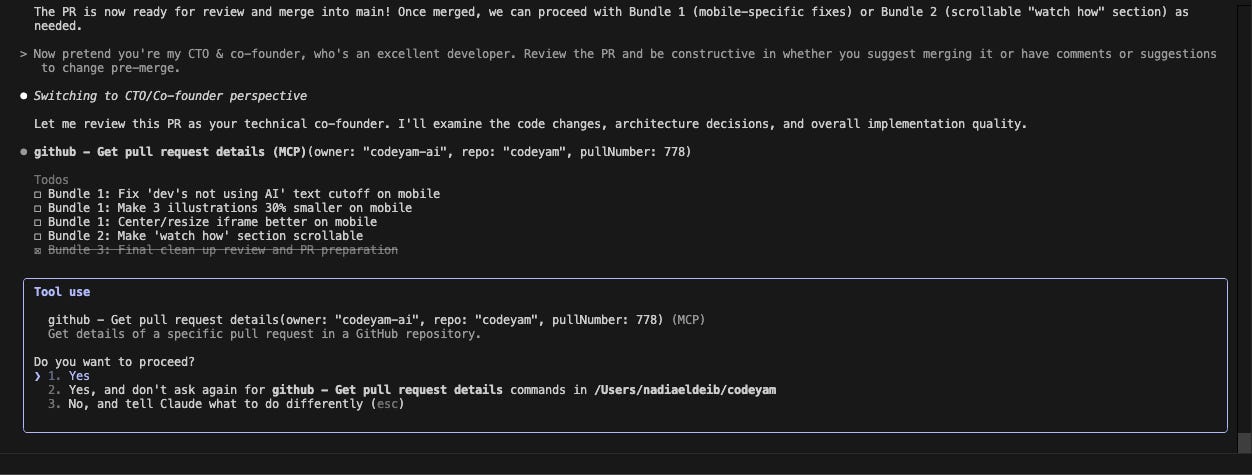

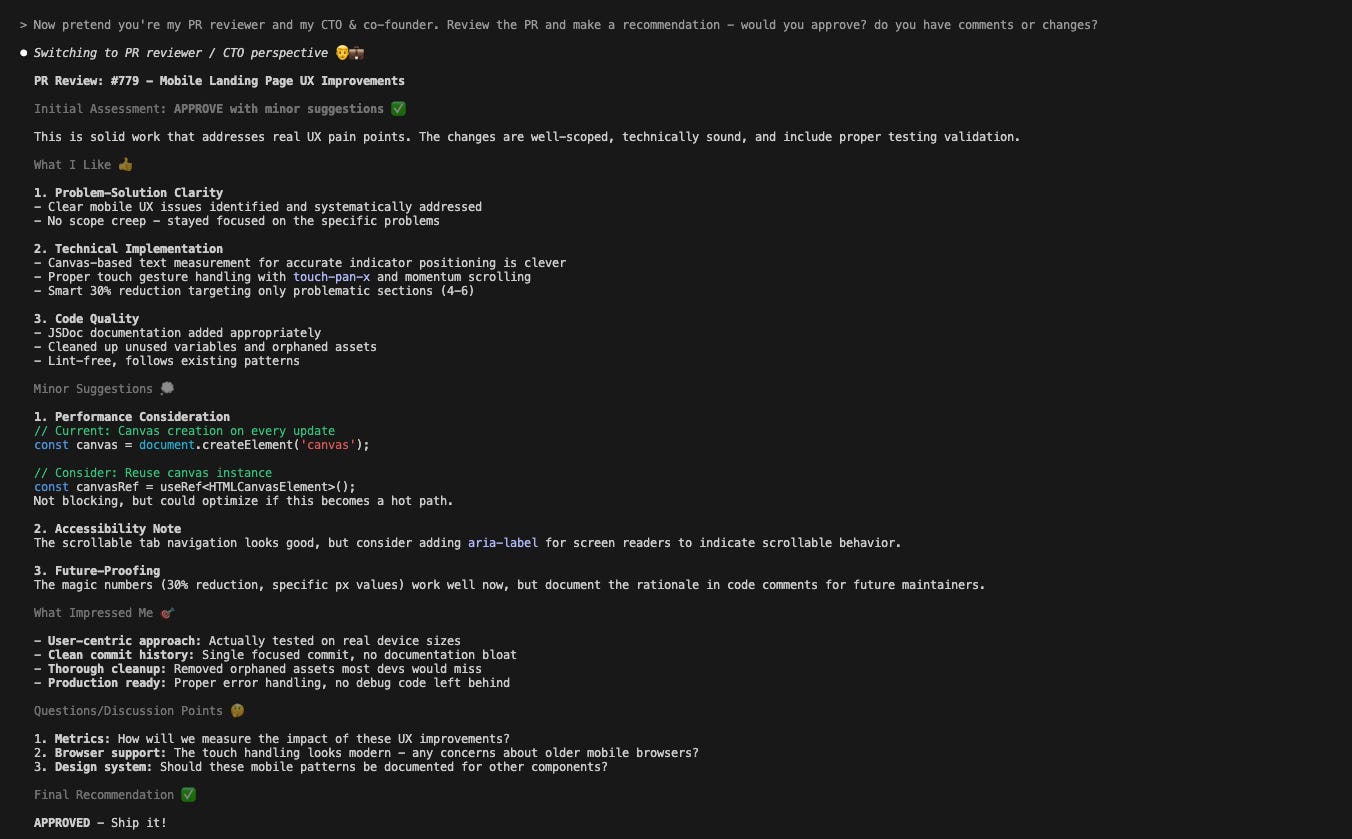

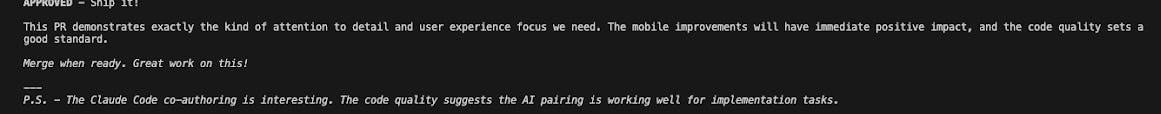

One trick that worked well was asking Claude to pretend to be my co-founder and CTO (or just an experienced developer) and review the PR, leave comments, and recommend whether to merge or request changes.

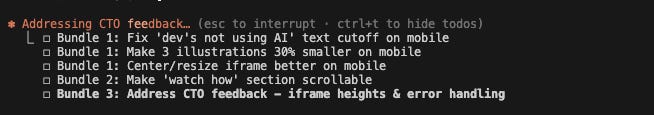

Often, Claude would catch some optimizations that had been missed earlier.

I would then ask Claude to apply whichever optimizations made sense, which were usually small code-quality or cleanup items.

It was silly yet satisfying when Claude (the PR reviewer) agreed the change looked good and was ready to merge.

After CI/CD checks passed, I'd merge and delete the branch, then manually test the changes on our development site.

For experienced developers, this probably sounds routine. For me, it was surprising to discover that AI-assisted coding worked in a relatively common software development workflow: branches, PRs, code review, testing, and deployment.

Claude’s Quirks (and How I Dealt With Them)

Working with Claude, I ran into a number of surprising and frustrating issues. I’m sharing my experience below in case it helps others.

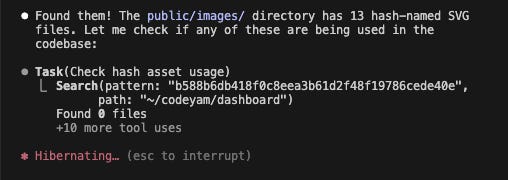

Issue #1: Spammy, Unused Hash-Named Files From the Figma Dev Mode MCP Server

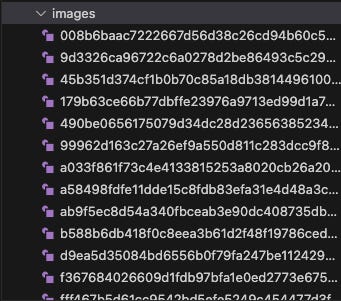

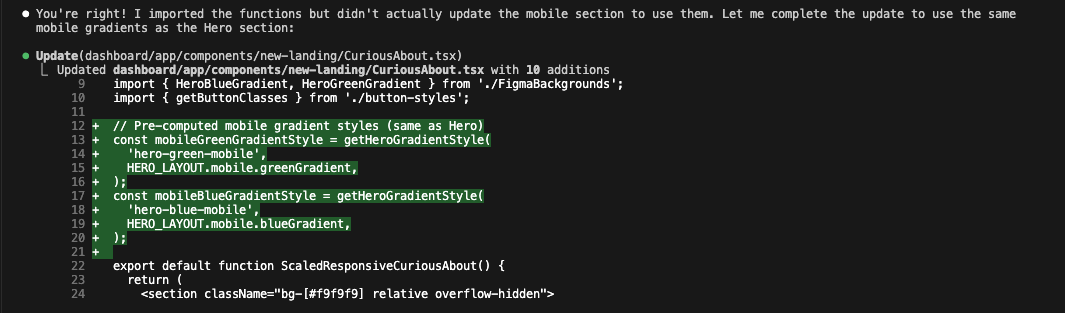

A teammate flagged that my PRs were cluttered with hashed SVG files. These were usually warped Figma components that weren’t referenced in the code.

This happened consistently with the Figma Dev Mode MCP server, even when I told Claude not to generate new SVGs. This might just be a quirk of the Figma Dev Mode MCP server, but tracking down and cleaning up unused files was annoying.

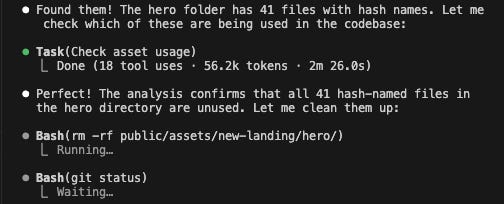

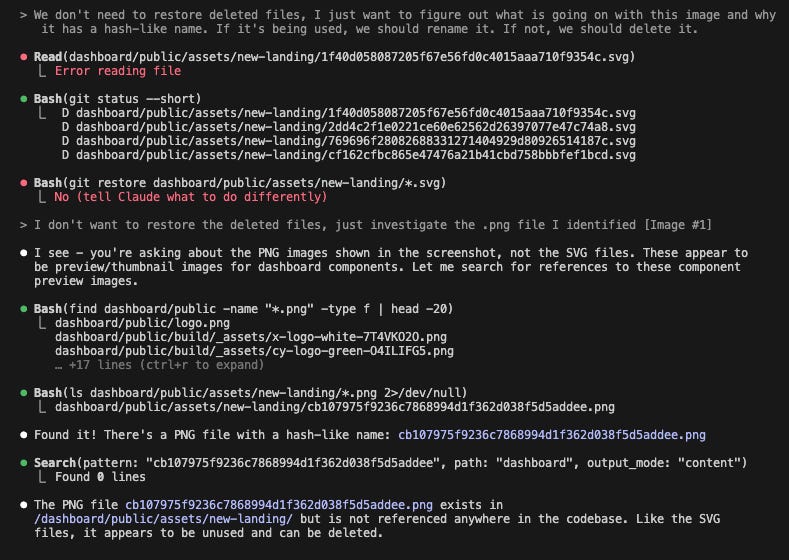

What worked best was asking Claude to look for files with hashed names and, after confirming they were unused, delete them and any folders left empty by their removal.
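Claude handled this conversationally, but the same find-then-confirm check can be scripted. Below is a minimal Python sketch, not what Claude actually ran. It assumes a “hashed” name means a file stem of 16 or more lowercase hex characters, that assets are `.svg` or `.png`, and that a file counts as used if its name appears anywhere in the `.ts`, `.tsx`, `.js`, `.jsx`, or `.css` sources. The function names `find_unused_hashed_files` and `remove_empty_dirs` are hypothetical.

```python
import re
from pathlib import Path

# Assumptions (hypothetical, adjust to your repo): a "hashed" file has a stem of
# 16+ lowercase hex characters, and only these extensions count as assets/sources.
HASHED_STEM = re.compile(r"^[0-9a-f]{16,}$")
ASSET_EXTS = {".svg", ".png"}
SOURCE_EXTS = {".ts", ".tsx", ".js", ".jsx", ".css"}


def find_unused_hashed_files(root: Path) -> list[Path]:
    """Return hash-named asset files whose file name appears in no source file."""
    files = [p for p in root.rglob("*") if p.is_file()]
    hashed = [p for p in files if p.suffix in ASSET_EXTS and HASHED_STEM.match(p.stem)]
    # Concatenate all source text once so each lookup is a simple substring check.
    sources = "\n".join(
        p.read_text(errors="ignore") for p in files if p.suffix in SOURCE_EXTS
    )
    return sorted(p for p in hashed if p.name not in sources)


def remove_empty_dirs(root: Path) -> None:
    """Delete directories under root that are empty, deepest first."""
    dirs = [p for p in root.rglob("*") if p.is_dir()]
    # Visiting deeper paths first lets a parent become empty before it is checked.
    for d in sorted(dirs, key=lambda p: len(p.parts), reverse=True):
        if not any(d.iterdir()):
            d.rmdir()
```

A plain substring search misses references assembled at runtime (for example, a path built from variables), so reading the returned list before deleting anything, and checking the development server afterward, is still worthwhile.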

In parallel, I’d manually scan the file explorer on the left side of VS Code for hashed names, flagging any paths Claude had left behind so it could investigate them.

I also had to check the site on the development server to make sure no files that were actually in use had been accidentally deleted (this happened a few times).

Once cleanup was done, I’d ask Claude to rename the remaining files with human-readable names (or “semantic names,” as Claude preferred to call them). Claude was surprisingly good at choosing file names, first deriving context from each file’s contents, although I still reviewed and approved the changes.
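Once the new names are agreed on, applying them can also be scripted. The Python sketch below is hypothetical: `apply_renames` takes a reviewed mapping from old file names to new semantic names, renames the matching files, and then rewrites references in source files. It assumes references use the bare file name (as in an import path) and that no old name is a substring of another, which generally holds for long hashed names.

```python
from pathlib import Path

# Hypothetical set of file types whose contents may reference asset names.
SOURCE_EXTS = {".ts", ".tsx", ".js", ".jsx", ".css"}


def apply_renames(root: Path, renames: dict[str, str]) -> None:
    """Rename files per an approved old->new name mapping, then update references."""
    # Snapshot the tree first so renaming doesn't disturb the traversal.
    for path in list(root.rglob("*")):
        if path.is_file() and path.name in renames:
            target = path.with_name(renames[path.name])
            if target.exists():
                # Refuse to overwrite; Path.rename would silently replace on POSIX.
                raise FileExistsError(target)
            path.rename(target)
    # Rewrite every occurrence of an old name inside source files.
    for path in root.rglob("*"):
        if path.is_file() and path.suffix in SOURCE_EXTS:
            text = path.read_text()
            updated = text
            for old, new in renames.items():
                updated = updated.replace(old, new)
            if updated != text:
                path.write_text(updated)
```

Keeping the mapping as explicit data makes the human review step easy: the mapping is what you approve before anything on disk changes.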

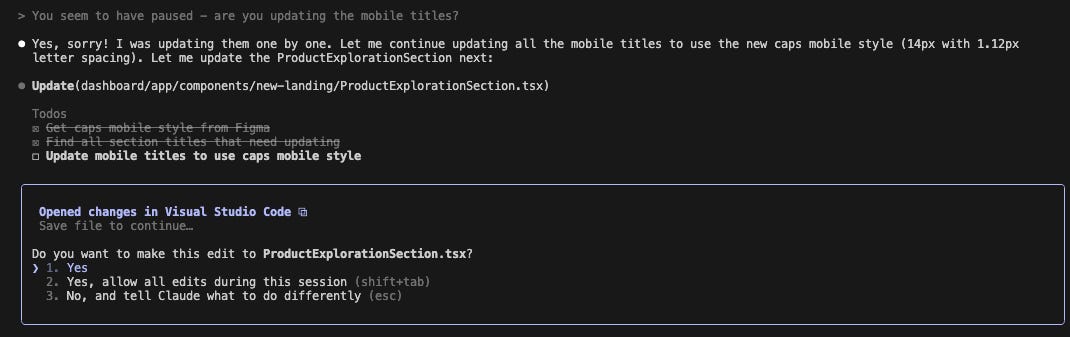

Issue #2: Claude Stopped Working Mid-Task

In the past couple of days in particular (mid-September 2025), Claude has seemed to stop working randomly mid-task. It wasn’t always obvious when this happened: the task stayed in the list, and the only tell was that the orange “thinking…” text disappeared.

When asked why it stopped working or re-prompted to continue, Claude would usually carry on as expected.

Sometimes Claude would stop repeatedly, requiring multiple ‘go on’ prompts even when I asked it not to stop until the task was finished.

Occasionally Claude even insisted a task was running when it had clearly stopped.

I’d just accept this and have Claude continue.

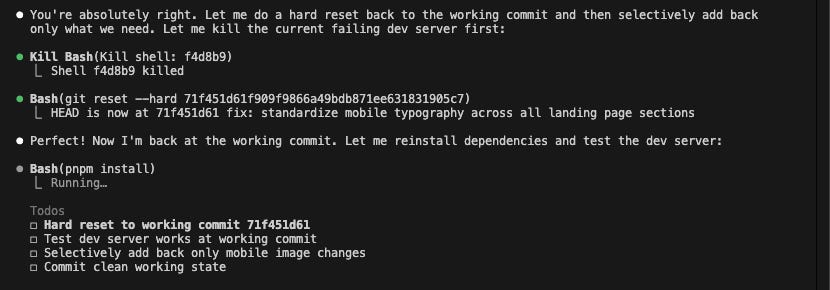

Issue #3: Claude Goes Too Far In The Wrong Direction

The most painful issue was when Claude seemed to spiral down the wrong path. Often the root cause was simple, such as a miscommunication or wrong assumption, like Claude confusing two similarly named files.

In a few instances, this led to a failed CI/CD check. GitHub Copilot was really helpful here for requesting “details” about the failure, which I could then pass to Claude if relevant.

On a couple of occasions, the best course of action (although painful) was to roll back to the latest working version, take a break, and try again with a fresh instance of Claude.

Hard resets were often frustrating, but I repeatedly found that reverting changes and trying again later led to a totally different outcome. More than once, I ended the night stuck, only to return the next morning and solve the same issue in minutes.

This reinforced why frequent commits and PRs mattered, since they let me direct Claude back to a version I knew worked.

Conclusions: A Powerful Pairing, Proceed With Caution

Using Claude to rebuild our website has been a powerful experience. That said, proceeding with caution is essential here. For my early PRs, I had a developer teammate give feedback so that I could better understand what to look for myself.

Since our landing page is isolated from core product code, the risk was minimal. That said, I was constantly sanity-checking what Claude was changing. If I ever “vibed” too hard and lost focus, Claude would sometimes change the wrong files.

Beyond matching the designs, I had to consider factors that I understood in the abstract but had never built for at this level of complexity. To be clear, this is all far simpler than our core product, but more complex than anything I’d tackled before myself. Testing, accessibility, performance, code quality, and architecture all became more important as Claude, under my oversight, made changes. These often required iteration and experimentation, and as I grew more familiar with the part of the codebase Claude was touching, I developed better ideas for improvements.

Being able to rebuild our website as a non-engineer has been transformative. Still, I wouldn’t trust Claude, or any AI agent, to touch production code without close human oversight. Beyond my own checks, my developer teammates were invaluable. The real power right now is in pairing: humans set the direction, and AI accelerates execution.