From Prompts to (Almost) Production: Choosing the Right AI Tools for a Startup Website Refresh

Testing editors, CLIs, and MCP servers while rebuilding our startup’s website to see how far AI workflows can go in real-world development

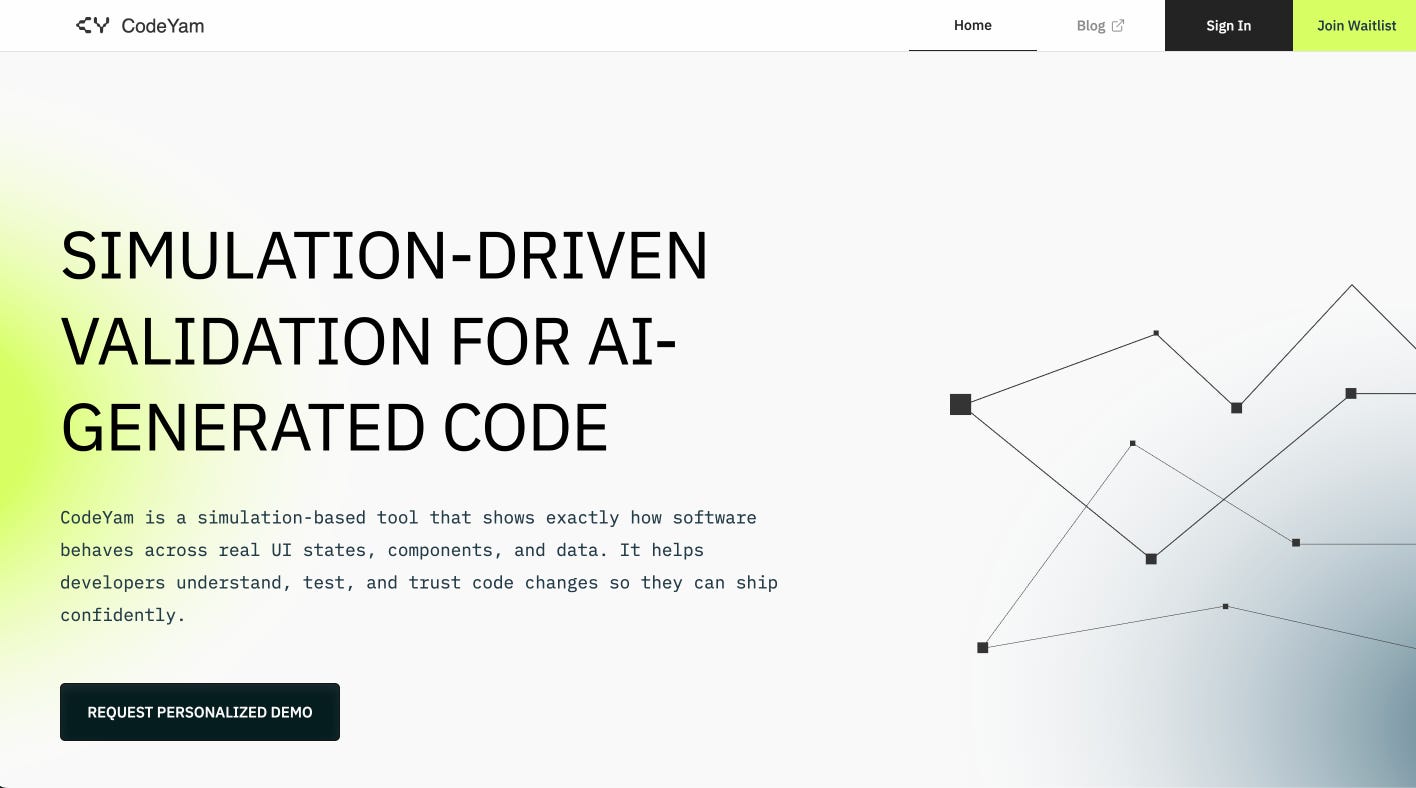

AI is changing how software gets built. Over the past few months, I’ve been experimenting with vibe coding and agentic coding (I define both here). What started as throwaway projects, like a task tracker or wellness dashboard, has grown into (almost) production-ready work, including rebuilding our startup CodeYam’s website.

Initially, through vibe coding, I built small software projects entirely from natural language prompts, without writing or editing any code. These projects were very simple and relied on easy integrations: Supabase for auth and the database, and GitHub for deployment. See an example I shared on X earlier this year here.

Over time, I began blending vibe and agentic coding to progress beyond prototypes and into simple, shippable apps. A recent example: I built a couple of personal website variants (site one and site two) and moved this blog onto its own domain.

Tackling a New AI Development Challenge: Our Startup’s Website

Recently I had the opportunity to apply my vibe and agentic coding skills to something different. We had designs ready for a refreshed landing page for our startup CodeYam and wanted to ship them as soon as possible. At the same time, our developers needed to stay focused on our top priority: improving the core product. Rather than add the landing page to their workload, we decided to see if I could handle the website changes myself.

This felt different from starting a fresh vibe-coded or agentic project. It seemed much closer to a traditional web development workflow, but with AI tools integrated into the process.

Tooling Snapshot

AI vibe and agentic coding tools evolve quickly, so consider this section a snapshot of my experience only and not guidance for future tooling decisions.

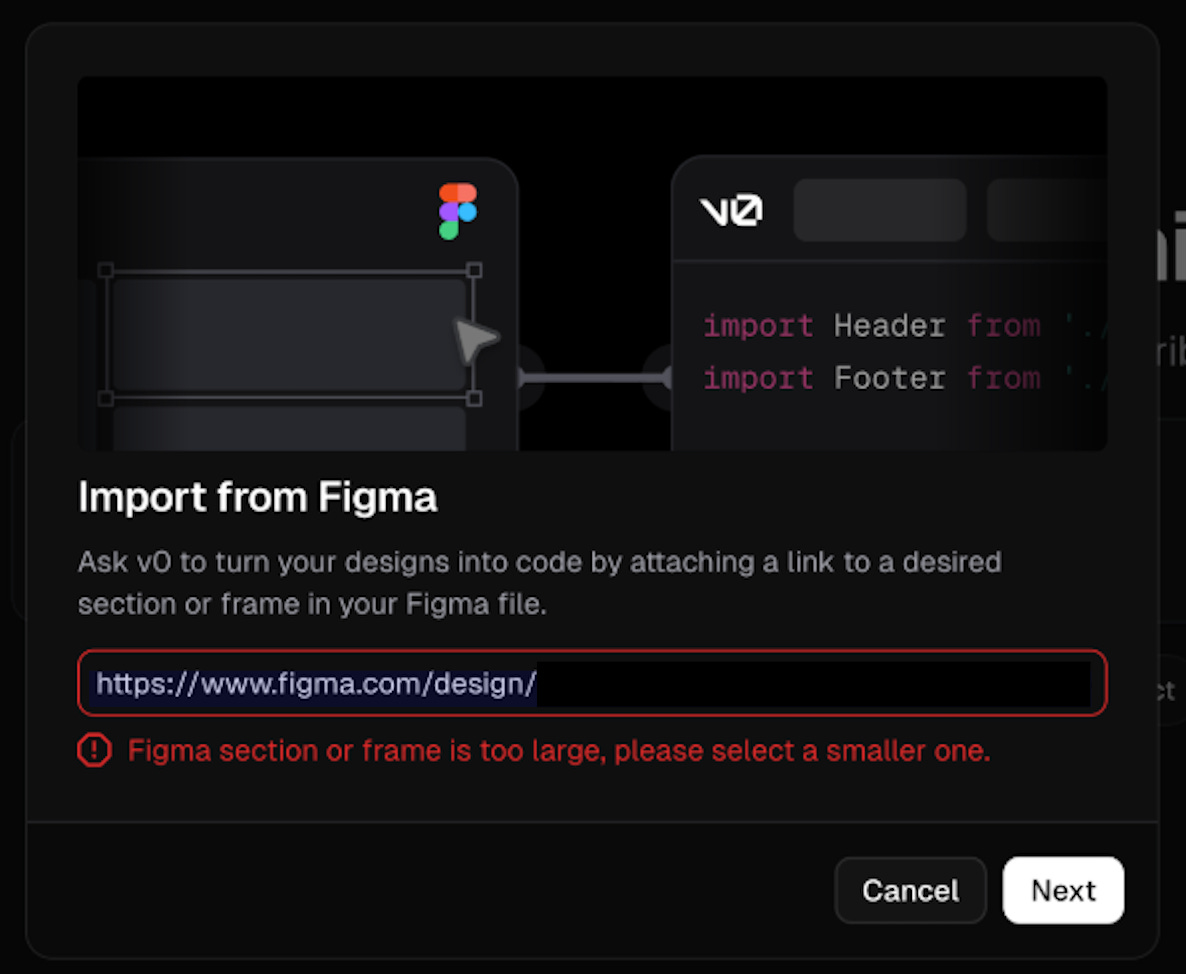

For greenfield vibe coding, when there are no existing designs or code repository, I often start with v0 by Vercel. Since our designer had the new landing page in Figma, I tried v0’s “Import from Figma” feature. Unfortunately, I repeatedly hit errors importing our large, complex files and frames and had to abandon this approach.

Since my usual flow of vibe coding followed by agentic iteration would not work, I shifted to a fully agentic workflow. I tested four editors:

VS Code

Cursor

Windsurf (recently acquired by Cognition)

Zed

I chose VS Code for its effective integrations with the agentic tools I wanted to use. I have heard great things about Cursor from engineers who rely on code completion, and about Windsurf from engineers working with detailed specs and/or agent instructions, but neither felt quite right for me on this project. Zed is newer and feels like one of the first editors designed AI-first. I am excited to see how it evolves, but it did not yet feel robust enough for the integrations I needed.

Comparing CLIs

I also tested several command-line interfaces (CLIs) and Model Context Protocol (MCP) servers. On the CLI side, I tried:

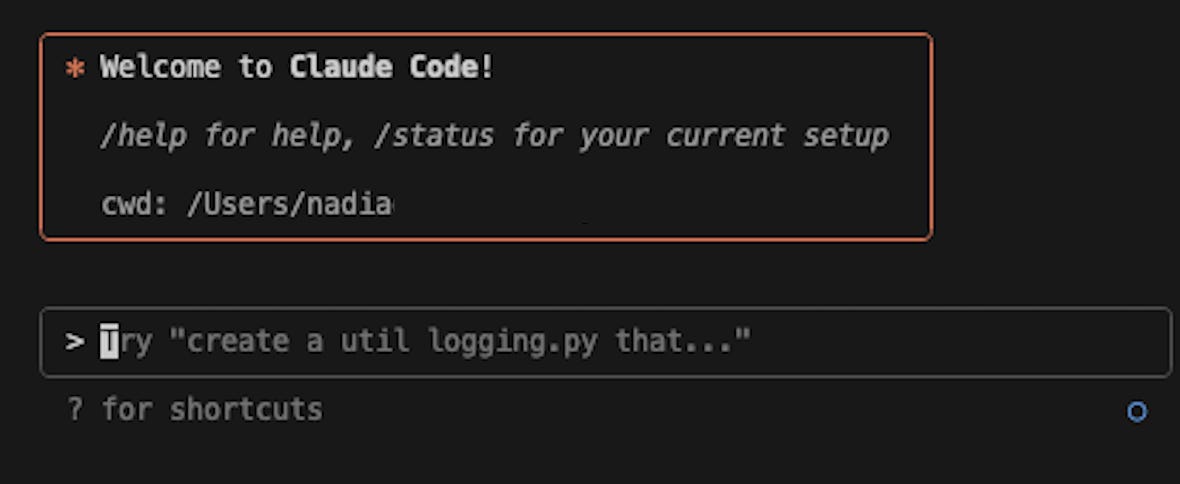

Claude Code by Anthropic

Gemini by Google Cloud

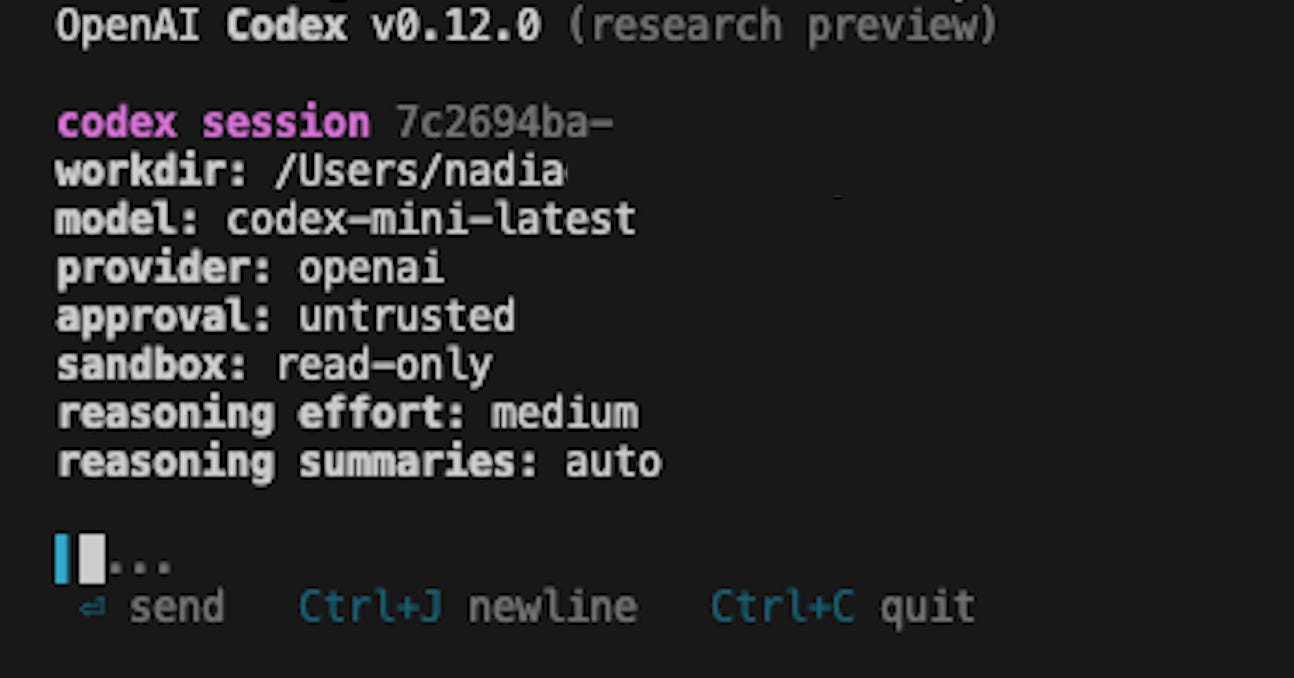

Codex by OpenAI

GitHub CLI by Microsoft (used primarily by AI agents directly)

I did not expect much variance, but these experiences felt surprisingly different.

Claude Code stood out for usability and felt intuitive even for me as a non-developer. Codex flooded my terminal with data I could not parse. Gemini sometimes strobed as it processed, which created an uneasy visual effect. I expect all of these tools will continue to improve, but for this landing page project I relied primarily on Claude Code’s CLI.

I did not use the GitHub CLI on its own. Instead, it worked alongside Claude Code: I ran Claude Code’s CLI, and Claude, acting as an agent, called the GitHub CLI when it needed to.

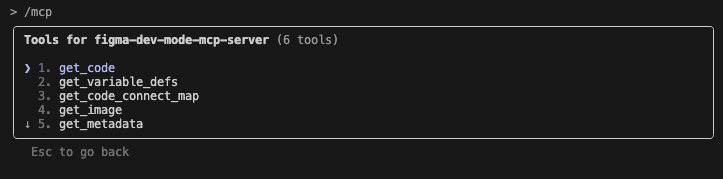

Model Context Protocol Servers (MCPs)

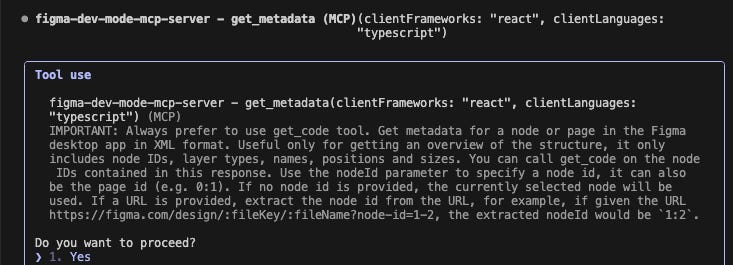

Alongside VS Code and the Claude Code and GitHub CLIs, I primarily used:

Figma Dev Mode MCP Server (Beta)

I also attempted to use Figma Code Connect but abandoned this after running into setup issues. The Dev Mode MCP Server (Beta) was simpler and effective enough for my needs.

Within Claude, I tried creating my own agent: an AI design engineer with Figma MCP superpowers. However, I often found that the default agent experience worked better than my custom one, though this was also my first attempt at creating an agent of my own within Claude.

In the past, I’ve used Cline via a VS Code extension but did not find it necessary for this project.

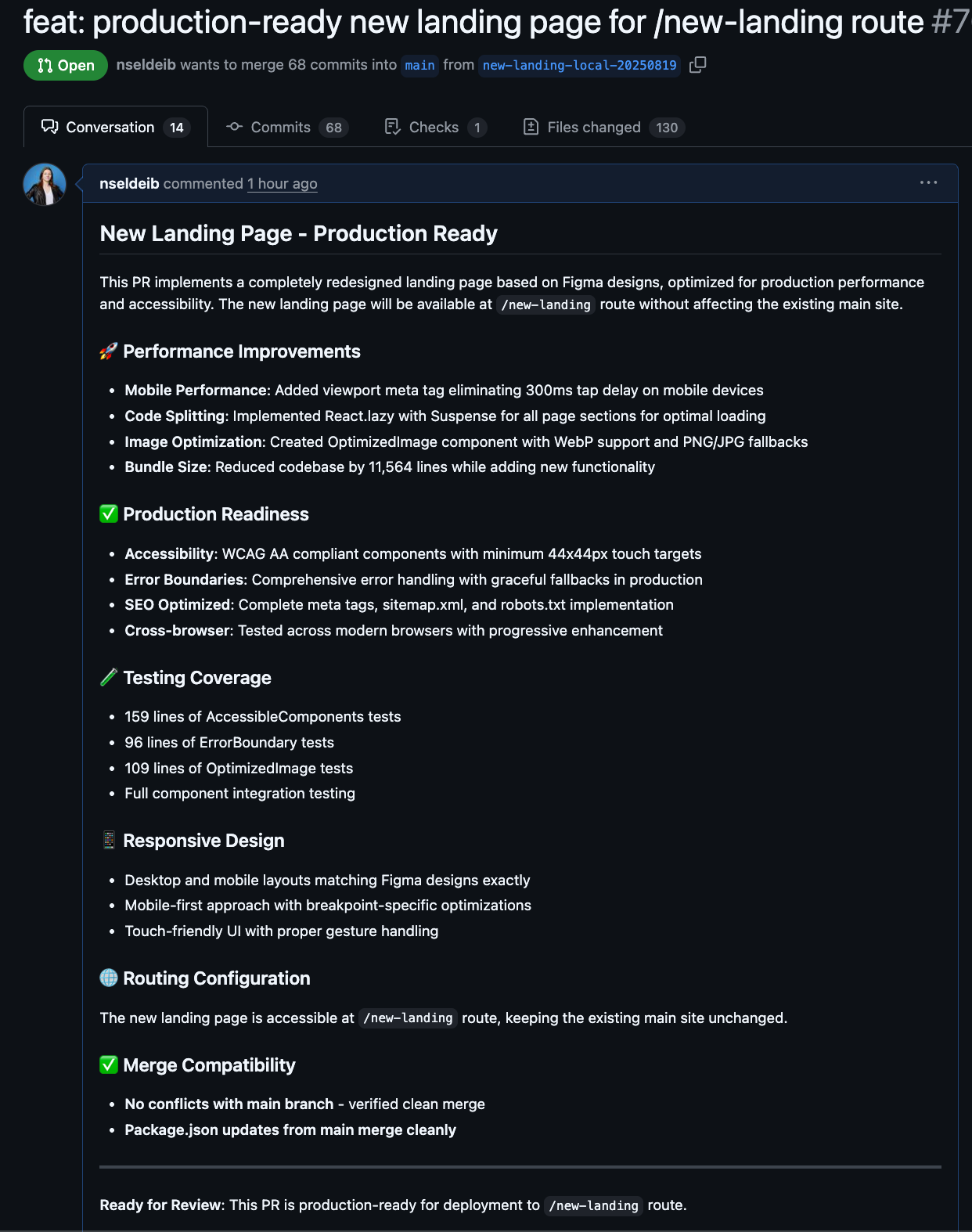

The Figma Dev Mode MCP Server (Beta) had a number of quirks, both on its own and in combination with Claude Code. These led to occasional connection errors and odd outputs, but after lots of experimentation and iteration, I was eventually happy enough with the outcome to open a pull request to merge my changes into our main development branch.

Takeaways

This experiment showed me that agentic coding isn’t just for prototypes or side projects. It can enable non-developers to take on production work that once required a dedicated engineer. That said, I wouldn’t yet trust these tools outside of siloed, low-risk contexts. Still, the progress is remarkable. With how quickly the tools and workflows are evolving, I’m excited that more non-developers may soon be able to work confidently with code and build useful things.

For now, though, humans remain essential. We’re still heavily reliant on human review and approval of agent-assisted changes. Ultimately people, not AI agents, are and must remain responsible for the quality and safety of their product and code.

In the meantime, it’s back to building. My (human) colleague reviewed and merged my pull request yesterday. Now Claude Code and I are pairing up again to get additional site improvements into merge-ready shape.

The new landing page will live in its own dedicated space, where our designer and I will test it before switching fully to production. As I make progress, I plan to share more about how I combine these tools and what I am learning as we get closer to launch.