Vibe Coding vs. Agentic Coding

Defining two distinct patterns in AI-assisted software development

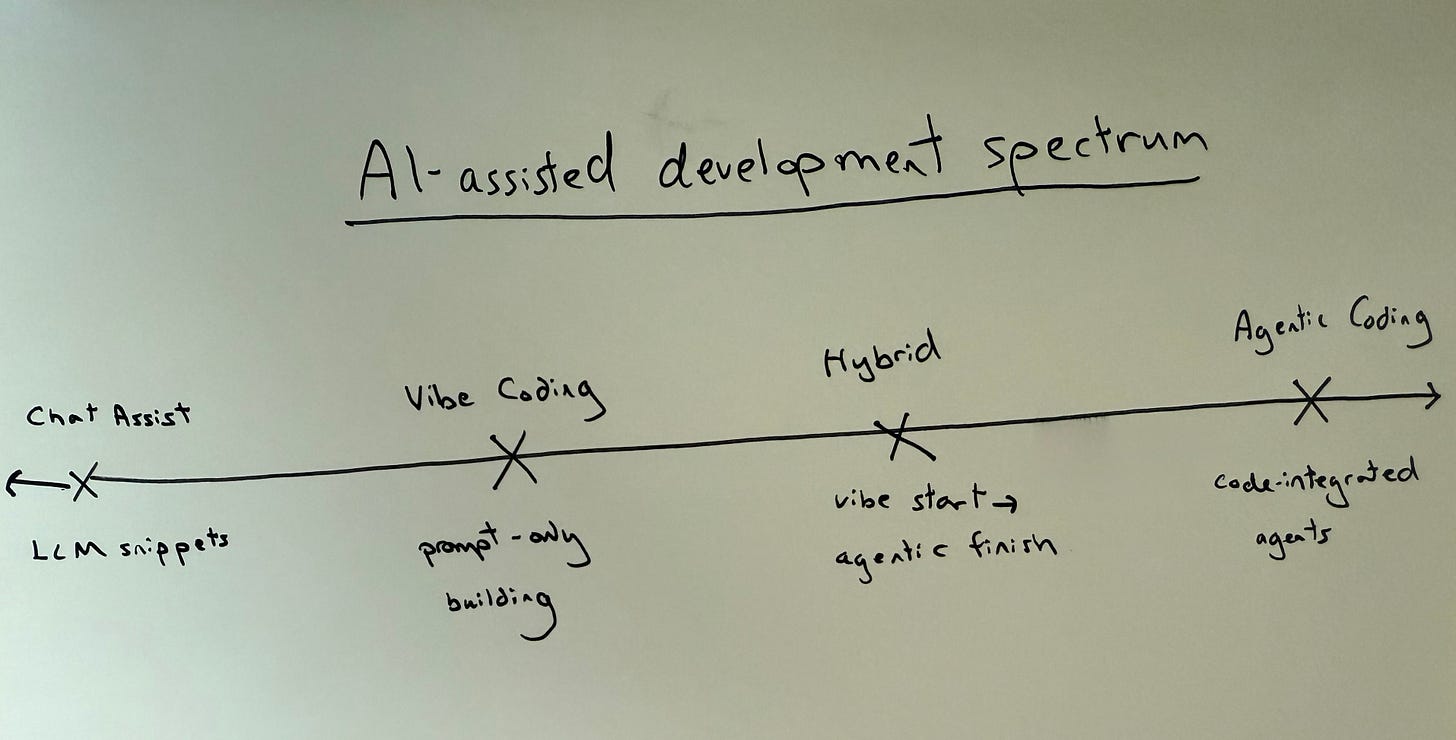

AI has changed how many of us build software and has led to a number of promising new tools and processes that continue to evolve rapidly. Sometimes you describe what you want and the AI spins up an entire app. Other times, AI agents work alongside you in your repo, editing files and refactoring code like a developer teammate. Both are technically AI-assisted software development, but they differ in important ways.

At CodeYam, our team noticed we were using the term “vibe coding” without a shared definition, which was pretty confusing. Once we defined “vibe coding”, we realized there was another distinct pattern of using AI for software development that we now call “agentic coding.” Naming this distinction has helped us better explore and understand different AI-native tools and approaches for software development.

Vibe Coding

Vibe coding is building software entirely through a natural-language interface, without reading or editing the underlying code. You describe what you want, the AI builds it, and you interact only through prompts and high-level configuration.

Typical traits:

No direct code manipulation

Iteration happens through prompting

Often starts from a blank slate or a boilerplate template

Common vibe coding tools: v0 by Vercel, Lovable, Figma Make, Bolt.new, GitHub Spark, Firebase Studio, Base44 (and there are many others).

You might still connect to services like Vercel (for deployment), GitHub (for version control), or Supabase (for database/auth), but you are not inspecting diffs or reasoning about the code’s architecture.

Vibe Coding Strengths:

Very fast idea-to-prototype turnaround

Low barrier for non-developers or early experimentation

Feels like an evolution of no-code tools, with fewer rigid constraints

Vibe Coding Constraints:

Not ideal for granular changes or debugging

Less visibility into technical debt or maintainability

Harder to ensure production-grade quality or security

Agentic Coding

Agentic coding is when AI agents interact directly with your codebase, typically through repo access, editor integration, or CLI commands. You still have visibility and control, but the agent accelerates your work: it can edit multiple files and handle multi-step workflows.

Typical patterns:

Pair programming agents (e.g., Devin by Cognition, GitHub Copilot)

Full editor replacements (Cursor, Windsurf, Zed)

Editor extensions (Anthropic’s Claude Code in VSCode, Cline)

CLI-based agents (OpenAI Codex CLI, Gemini CLI, Anthropic’s Claude Code CLI)

Agentic tools often integrate with Model Context Protocol (MCP) servers to pull in information from other parts of the toolchain. For example, the Figma Dev Mode MCP Server (Beta) can pass design specs into your coding environment, and the GitHub MCP Server can surface PR data or repo context.

Agentic Coding Strengths:

Fits into established engineering workflows

Enables targeted changes, refactors, and bug fixes

Better for maintaining long-lived codebases

Agentic Coding Constraints:

Higher learning curve than vibe coding

Requires some technical fluency to be effective

Speed gains depend heavily on integration depth and agent quality

What About Just Using ChatGPT or Claude to Write Code?

There is also a lighter-weight style of AI-assisted development that is neither vibe coding nor agentic coding. Here you use a general-purpose LLM like ChatGPT, Claude, or Gemini to generate or explain code, or to draft a spec, and then paste the output manually into your editor (for code) or into a vibe or agentic coding tool (for a spec).

How it works:

You describe what you need in a chat window

The model returns code snippets, specs, or explanations

You copy them into your project or tool and adapt as needed

How it differs from…

Vibe coding: you still work in your own environment and see the code directly

Agentic coding: the AI has no persistent repo context or direct integration

When it’s useful:

Writing prompts or specs that you later use for vibe or agentic coding

Small utility scripts or isolated functions

Learning a new framework or API

Getting unstuck on syntax or logic errors

Early-stage exploration before deeper integration

Constraints:

No persistent context across files or sessions

Manual integration means more chances for mismatches

Slower for multi-file or large-scale changes than agentic tools

For many, this “chat assist” approach is the most common entry point into using AI for development. It has almost no setup cost and works with any stack, but it does not match the speed or automation of fully agentic workflows.

Choosing the Right Approach

As a founder and former product manager who is learning to code, I have found that for:

Greenfield projects: Vibe coding is the fastest way from idea to prototype.

Existing codebases: Agentic coding is better for precision, quality, and maintainability.

Hybrid workflows: Start with vibe coding for speed, then move to agentic tools for production readiness.

Today, most of the builders I know, from experienced developers to non-technical or less technical folks like me, prefer a fairly similar stack: Claude Code in the CLI plus VSCode or Cursor, with MCP Server integrations where possible.

For example, I am currently working on our CodeYam landing page website refresh and have been testing Claude Code with the Figma Dev Mode MCP Server (Beta) to translate designs into TypeScript/Remix. This experiment has shown me where these workflows work well and where they still fall short, especially when translating complex design files into code.

Why The Distinction Between Vibe and Agentic Coding Matters

The line between vibe coding and agentic coding is already starting to blur. Tools like v0 hint at hybrid models where you might start with a prompt-driven build and then transition into a more agentic refinement loop.

For builders, the key is knowing which approach fits the job. Vibe coding excels at rapid prototyping and greenfield projects. Agentic coding shines when precision, maintainability, and production readiness matter. Many developers end up mixing both, starting with speed and moving toward control.

This is still a new framework, and I am continuing to test how I use AI to help build software on various projects. I will share more from my experiments soon. In the meantime, if you have your own working definitions or see the split differently, I’d love to hear your perspective.